
- Oct 08

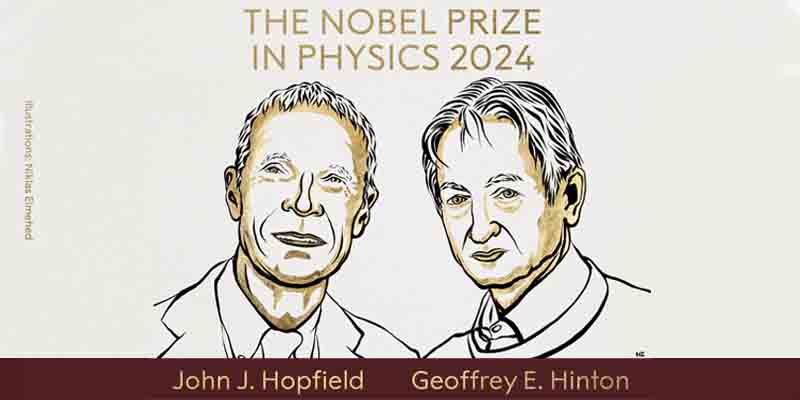

2 scientists win Nobel Prize in Physics for work on machine learning

• US scientist John Hopfield and British-Canadian Geoffrey Hinton won the 2024 Nobel Prize in Physics for discoveries and inventions that laid the foundation for machine learning.

• John Hopfield created a structure that can store and reconstruct information. Geoffrey Hinton invented a method that can independently discover properties in data and which has become important for the large artificial neural networks now in use.

• Hopfield is a professor at Princeton University, US.

• Hinton is a professor at the University of Toronto, Canada.

Machine learning differs from traditional software

• Machine learning has developed explosively over the past 15 to 20 years. It utilises a structure called an artificial neural network, and nowadays, when we talk about artificial intelligence, this is often the type of technology we mean.

• Although computers cannot think, machines can now mimic functions such as memory and learning.

• This year’s laureates in physics have helped make this possible. Using fundamental concepts and methods from physics, they have developed technologies that use structures in networks to process information.

• Machine learning differs from traditional software, which works like a type of recipe. The software receives data, which is processed according to a clear description and produces the results, much like when someone collects ingredients and processes them by following a recipe, producing a cake.

• In machine learning, by contrast, the computer learns by example, enabling it to tackle problems that are too vague and complicated to be managed by step-by-step instructions. One example is interpreting a picture to identify the objects in it.
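The contrast between a recipe and learning by example can be sketched in a few lines of Python. This is only an illustration: the toy task (deciding whether a point lies above the line y = x), the function names and the perceptron learning rule are assumptions chosen for brevity, not something described in the article.

```python
# Illustrative sketch only; the task, names and learning rule are assumptions.

def recipe_classifier(x, y):
    """Traditional software: a programmer writes the rule explicitly."""
    return 1 if y > x else 0


def train_perceptron(examples, epochs=50, rate=1.0):
    """Learn weights from labelled examples instead of a written rule."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x, y), label in examples:
            prediction = 1 if w[0] * x + w[1] * y + b > 0 else 0
            error = label - prediction  # 0 when the guess was right
            w[0] += rate * error * x
            w[1] += rate * error * y
            b += rate * error
    return w, b


def learned_classifier(w, b, x, y):
    """Apply the learned weights to a new point."""
    return 1 if w[0] * x + w[1] * y + b > 0 else 0


# Labelled examples: 1 means the point lies above the line y = x.
examples = [((0, 1), 1), ((1, 0), 0), ((0, 2), 1),
            ((2, 0), 0), ((1, 3), 1), ((3, 1), 0)]
w, b = train_perceptron(examples)

# Points that were not among the training examples.
unseen = [(2, 5), (5, 2)]
learned = [learned_classifier(w, b, x, y) for x, y in unseen]
by_recipe = [recipe_classifier(x, y) for x, y in unseen]
print(learned, by_recipe)
```

In this sketch the learned classifier is never told the rule; it only sees labelled points, yet it ends up agreeing with the hand-written recipe on points it has not seen.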

Artificial neural networks

• An artificial neural network (ANN) processes information using the entire network structure. The inspiration initially came from the desire to understand how the brain works.

• Inspired by biological neurons in the brain, ANNs are large collections of “neurons”, or nodes, connected by “synapses”, or weighted couplings, which are trained to perform certain tasks rather than asked to execute a predetermined set of instructions.

• The network is trained, for example, by developing stronger connections between nodes with simultaneously high values.

• At the end of the 1960s, some discouraging theoretical results caused many researchers to suspect that these neural networks would never be of any real use. However, interest in artificial neural networks was reawakened in the 1980s, when several important ideas made an impact, including work by this year’s laureates.
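The training idea mentioned above, strengthening the connection between nodes that have high values at the same time, is commonly known as Hebbian learning. A minimal sketch follows, assuming three nodes, activity values of 0 or 1 and a learning rate of 0.5; all names and numbers are hypothetical and chosen only for illustration.

```python
# Illustrative sketch of a Hebbian-style update; sizes and rate are assumptions.

def hebbian_update(weights, activity, rate=0.5):
    """Strengthen the coupling between every pair of nodes active together."""
    n = len(activity)
    for i in range(n):
        for j in range(n):
            if i != j:  # a node has no connection to itself
                weights[i][j] += rate * activity[i] * activity[j]
    return weights


def weighted_input(weights, activity, i):
    """Signal arriving at node i: the weighted sum of the other nodes' values."""
    return sum(weights[i][j] * activity[j] for j in range(len(activity)))


# Three nodes, all connections initially zero.
weights = [[0.0] * 3 for _ in range(3)]

# Nodes 0 and 1 are repeatedly active together; node 2 stays silent.
for _ in range(2):
    hebbian_update(weights, [1, 1, 0])

print(weights[0][1], weights[0][2])
# After training, activating node 0 alone sends a signal to node 1.
print(weighted_input(weights, [1, 0, 0], 1))
```

Only the connection between the two co-active nodes grows, so the network has in effect recorded that those nodes belong together.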

Hopfield network

• John Hopfield invented a network that uses a method for saving and recreating patterns. We can imagine the nodes as pixels in an image. The Hopfield network draws on the physics that describes a material’s characteristics arising from atomic spin, a property that makes each atom a tiny magnet.

• The network as a whole is described in a manner equivalent to the energy in the spin system found in physics, and is trained by finding values for the connections between the nodes so that the saved images have low energy.

• When the Hopfield network is fed a distorted or incomplete image, it methodically works through the nodes and updates their values so the network’s energy falls. The network thus works stepwise to find the saved image that is most like the imperfect one it was fed.
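The saving and recalling steps described above can be sketched as a small program. This is a simplified textbook-style formulation, not taken from the article: it assumes nodes that take the values +1 or -1, a storage rule that adds up the products of node values for each saved pattern, and two made-up eight-node patterns standing in for images.

```python
# Illustrative Hopfield-style sketch; patterns and names are assumptions.

def train_hopfield(patterns):
    """Set connections so that each saved pattern sits at low energy."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += p[i] * p[j]
    return weights


def energy(weights, state):
    """Energy of a state, in analogy with a system of interacting spins."""
    n = len(state)
    return -0.5 * sum(weights[i][j] * state[i] * state[j]
                      for i in range(n) for j in range(n))


def recall(weights, state, max_sweeps=10):
    """Work through the nodes one at a time until no node changes."""
    state = list(state)
    n = len(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            field = sum(weights[i][j] * state[j] for j in range(n))
            new_value = 1 if field >= 0 else -1
            if new_value != state[i]:
                state[i] = new_value
                changed = True
        if not changed:
            break
    return state


saved = [[1, 1, 1, 1, -1, -1, -1, -1],
         [1, -1, 1, -1, 1, -1, 1, -1]]
weights = train_hopfield(saved)

# Distort the first saved pattern by flipping two of its nodes.
noisy = list(saved[0])
noisy[0] = -noisy[0]
noisy[5] = -noisy[5]

recalled = recall(weights, noisy)
print(recalled == saved[0])
print(energy(weights, noisy), energy(weights, recalled))
```

In this sketch each single-node update can only keep the energy the same or lower it, which is why the procedure settles on a saved pattern rather than wandering indefinitely.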

Boltzmann machine

• Geoffrey Hinton used the Hopfield network as the foundation for a new network that uses a different method: the Boltzmann machine. This can learn to recognise characteristic elements in a given type of data.

• Hinton used tools from statistical physics, the science of systems built from many similar components. The machine is trained by feeding it examples, and its connections are adjusted so that those examples are very likely to arise when the machine is run.

• The Boltzmann machine can be used to classify images or create new examples of the type of pattern on which it was trained. Hinton has built upon this work, helping initiate the current explosive development of machine learning.
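The statistical idea behind this kind of training can be sketched on a very small scale. The example below is a heavily simplified assumption, not Hinton's actual procedure: it uses only three visible nodes with values +1 or -1, no hidden nodes and no bias terms, and it computes probabilities exactly by listing all eight states, whereas practical Boltzmann machines rely on sampling. The function names and training data are hypothetical.

```python
# Illustrative sketch of a tiny, fully visible Boltzmann machine.
import itertools
import math


def energy(weights, state):
    """Energy of a state; each pair of nodes is counted once."""
    n = len(state)
    return -sum(weights[i][j] * state[i] * state[j]
                for i in range(n) for j in range(i + 1, n))


def boltzmann_probabilities(weights, n, temperature=1.0):
    """Probability of every state: low-energy states are more likely."""
    states = list(itertools.product([-1, 1], repeat=n))
    factors = [math.exp(-energy(weights, s) / temperature) for s in states]
    total = sum(factors)
    return {s: f / total for s, f in zip(states, factors)}


def train(data, n, steps=200, rate=0.1):
    """Nudge weights until the machine's statistics resemble the data's."""
    weights = [[0.0] * n for _ in range(n)]
    for _ in range(steps):
        probs = boltzmann_probabilities(weights, n)
        for i in range(n):
            for j in range(i + 1, n):
                data_corr = sum(s[i] * s[j] for s in data) / len(data)
                model_corr = sum(p * s[i] * s[j] for s, p in probs.items())
                weights[i][j] += rate * (data_corr - model_corr)
    return weights


# Training examples: nodes 0 and 1 agree, node 2 is their opposite.
data = [(1, 1, -1), (-1, -1, 1)]
weights = train(data, 3)
probs = boltzmann_probabilities(weights, 3)

# States ranked from most to least likely when the machine is run.
ranked = sorted(probs, key=probs.get, reverse=True)
print(ranked[:2])
```

After training, the states the machine is most likely to produce are the training examples themselves, which is the sense in which the examples become "very likely to arise when the machine is run".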

Machine learning’s areas of application

• Thanks to their work from the 1980s onward, John Hopfield and Geoffrey Hinton have helped lay the foundation for the machine learning revolution that started around 2010.

• The development we are now witnessing has been made possible through access to the vast amounts of data that can be used to train networks, and through the enormous increase in computing power.

• Today’s artificial neural networks are often enormous and constructed from many layers. These are called deep neural networks and the way they are trained is called deep learning.

• Many researchers are now developing machine learning’s areas of application. Which of these will prove most viable remains to be seen, and there is also wide-ranging discussion of the ethical issues surrounding the development and use of this technology.

• Because physics has contributed tools for the development of machine learning, it is interesting to see how physics, as a research field, is also benefitting from artificial neural networks. Machine learning has long been used in areas we may be familiar with from previous Nobel Prizes in Physics.

• These include the use of machine learning to sift through and process the vast amounts of data necessary to discover the Higgs particle.

• Other applications include reducing noise in measurements of the gravitational waves from colliding black holes, or the search for exoplanets.

• In recent years, this technology has also begun to be used to calculate and predict the properties of molecules and materials. Examples include calculating the structure of protein molecules, which determines their function, and working out which new versions of a material may have the best properties for use in more efficient solar cells.
