- Nov 09

Explainer - What are deepfakes and synthetic media?

The Centre has issued an advisory to major social media companies to identify misinformation, deepfakes and other content that violates rules, and to remove such content within 36 hours of it being reported to them.

The development comes after a deepfake video of actress Rashmika Mandanna was found circulating on social media platforms, drawing criticism from several politicians and celebrities.

Union Minister Rajeev Chandrasekhar said that deepfakes are a major violation and harm women in particular.

What is synthetic media?

• Synthetic media is a general term used to describe text, images, videos or voice that has been generated using artificial intelligence (AI). It has the capability to alter the landscape of misinformation.

• As with many technologies, synthetic media techniques can be used for both positive and malicious purposes.

• The most substantial threats from the abuse of synthetic media include techniques that threaten an organisation’s brand, impersonate persons, and use fraudulent communications to enable access to an organisation’s networks, communications, and sensitive information.

What is a deepfake?

• Manipulation of images is not new. But over recent decades digital recording and editing techniques have made it far easier to produce fake visual and audio content, not just of humans but also of animals, machines and even inanimate objects.

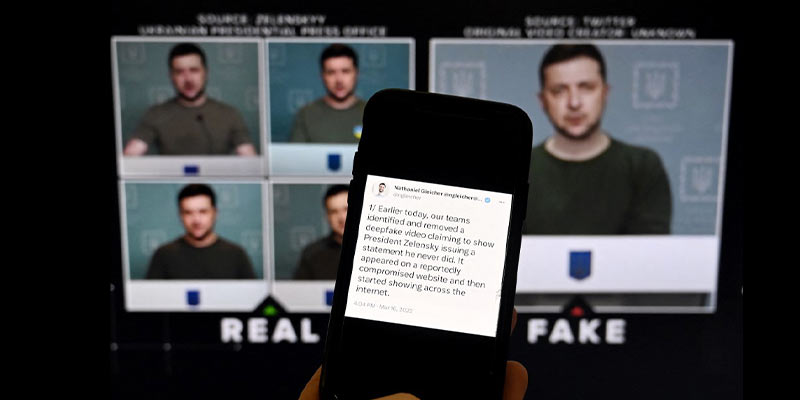

• Deepfakes are a particularly concerning type of synthetic media that utilises AI to create believable and highly realistic media.

• A deepfake is a digital photo, video or sound file of a real person that has been edited to create an extremely realistic but false depiction of them doing or saying something that they did not actually do or say.

• A deepfake is a digital forgery created through “deep learning” (a subset of AI).

• Advances in artificial intelligence (AI) have taken the technology even further, allowing it to rapidly generate content that is extremely realistic, almost impossible to detect with the naked eye and difficult to debunk.

• The term “deepfakes” is derived from the fact that the technology involved in creating this particular style of manipulated content (or fakes) involves the use of deep learning techniques. Deep learning represents a subset of machine learning techniques which are themselves a subset of artificial intelligence.

• One of the most common techniques for creating deepfakes is the face swap. There are many applications that allow a user to swap faces.

• Another deepfake technique is “lip syncing”. It involves mapping a voice recording from one or multiple contexts onto a video recording from another, so that the subject of the video appears to be genuinely saying the words. Lip-syncing technology allows the user to make their target appear to say anything they want.

• Another technique allows for the creation of “puppet-master” deepfakes, in which one person’s (the master’s) facial expression and head movements are mapped onto another person (the puppet).

• Using deepfakes to target and abuse others is not simply a technology problem. It is the result of social, cultural and behavioural issues being played out online.

• The threat of deepfakes and synthetic media comes not only from the technology used to create it, but from people’s natural inclination to believe what they see.

Generative Adversarial Networks (GANs)

• A key technology used to produce deepfakes and other synthetic media is the Generative Adversarial Network (GAN). In a GAN, two machine learning networks develop synthetic content through an adversarial process.

• The first network is the “generator.” Data that represents the type of content to be created is fed to this first network so that it can ‘learn’ the characteristics of that type of data.

• The generator then attempts to create new examples of that data which exhibit the same characteristics as the original data. These generated examples are then presented to the second machine learning network, which has also been trained (but through a slightly different approach) to ‘learn’ to identify the characteristics of that type of data.

• This second network (the “adversary”, usually called the “discriminator” in technical literature) attempts to detect flaws in the presented examples and rejects those which it determines do not exhibit the same sort of characteristics as the original data, identifying them as “fakes”.

• These fakes are then ‘returned’ to the first network, so it can learn to improve its process of creating new data. This back and forth continues until the generator produces fake content that the adversary identifies as real.

• The concept was initially developed by Ian Goodfellow and his colleagues in June 2014.

• In machine learning, an algorithm uses training data to develop a model for a specific task. The more robust and complete the training data, the better the model gets. In deep learning, a model is able to automatically discover representations of features in the data that permit classification or parsing of the data. Such models are effectively trained at a “deeper” level.
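
The back and forth between the generator and the adversary described above can be illustrated with a deliberately tiny sketch. It is not how real deepfake tools are built: the "content" here is just single numbers clustered around 4, the generator is a straight line (a*z + b), the adversary is a single logistic unit, and the names ToyGAN, real_batch, generate, score and step are hypothetical, chosen only for this illustration. Real systems use deep neural networks and a machine learning library, but the two alternating updates follow the same idea.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class ToyGAN:
    """Generator g(z) = a*z + b; adversary D(x) = sigmoid(w*x + c)."""

    def __init__(self, lr: float = 0.05, seed: int = 0):
        self.a, self.b = 1.0, 0.0  # generator parameters
        self.w, self.c = 0.0, 0.0  # adversary parameters
        self.lr = lr
        self.rng = random.Random(seed)

    def real_batch(self, n: int) -> list:
        # "Original data": numbers clustered around 4 (an arbitrary choice).
        return [self.rng.gauss(4.0, 0.5) for _ in range(n)]

    def generate(self, n: int):
        # The generator turns random noise z into candidate fakes.
        # The noise is returned too, since the update for `a` needs it.
        zs = [self.rng.gauss(0.0, 1.0) for _ in range(n)]
        return zs, [self.a * z + self.b for z in zs]

    def score(self, x: float) -> float:
        # Adversary's estimated probability that x is real.
        return sigmoid(self.w * x + self.c)

    def step(self, n: int = 64) -> None:
        real = self.real_batch(n)
        zs, fake = self.generate(n)

        # 1) Adversary update: push score(real) toward 1 and
        #    score(fake) toward 0 (gradient of the log loss).
        gw = gc = 0.0
        for x in real:
            d = self.score(x)
            gw += -(1.0 - d) * x
            gc += -(1.0 - d)
        for x in fake:
            d = self.score(x)
            gw += d * x
            gc += d
        self.w -= self.lr * gw / n
        self.c -= self.lr * gc / n

        # 2) Generator update: the adversary's verdict is "returned"
        #    as a gradient that nudges the fakes toward scoring as real.
        ga = gb = 0.0
        for z, x in zip(zs, fake):
            d = self.score(x)
            ga += -(1.0 - d) * self.w * z
            gb += -(1.0 - d) * self.w
        self.a -= self.lr * ga / n
        self.b -= self.lr * gb / n
```

Calling `ToyGAN().step()` repeatedly alternates the two updates. After the first step the adversary has learned that larger numbers look more "real", and the generator's offset `b` has started moving from 0 toward the real data. A toy like this tends to oscillate around the target rather than settle, which hints at why training real GANs is delicate.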

Emerging trends in deepfakes

• The possibilities for misuse are growing exponentially as digital distribution platforms become more publicly accessible and the tools to create deepfakes become relatively cheap, user-friendly and mainstream.

• Deepfakes have the potential to cause significant damage. They have been used to create fake news, false pornographic videos and malicious hoaxes, usually targeting well-known people such as politicians and celebrities. Potentially, deepfakes can be used as a tool for identity theft, extortion, sexual exploitation, reputational damage, ridicule, intimidation and harassment.

• A deepfake video showed Ukrainian President Volodymyr Zelenskyy telling his country to surrender to Russia. Separately, several Russian TV channels and radio stations were hacked and a purported deepfake video of President Vladimir Putin was aired, claiming he was enacting martial law because Ukrainians were invading Russia.

• In 2019, deepfake audio was used to steal $243,000 from a UK company and, more recently, there has been a massive increase in personalised AI scams given the release of sophisticated and highly trained AI voice cloning models.

• Dynamic trends in technology development associated with the creation of synthetic media will continue to drive down the cost and technical barriers to using this technology for malicious purposes. By 2030, the generative AI market is expected to exceed $100 billion, growing at an average rate of more than 35 per cent per year.

• Earlier, making a sophisticated fake with specialised software could take a professional days to weeks to construct. But now, these fakes can be produced in a fraction of the time with limited or no technical expertise.

• This is largely due to advances in computational power and deep learning, which make it not only easier to create fake multimedia, but also less expensive to mass produce.

• In addition, the market is now flooded with free, easily accessible tools (some powered by deep learning algorithms) that make the creation or manipulation of multimedia essentially plug-and-play.

• As a result, these publicly available techniques have increased in value to adversaries of all types and become widely available tools, enabling fraud and disinformation that exploit targeted individuals and organisations.
